Quantized YOLO for Edge Solutions

Improving quantized state-of-the-art object detection methods

In the previous article, we discussed how we set up pigeon detection on an edge device. We took for granted the existence of a quantized model that can be deployed. Obtaining such a model is not straightforward, so in this article we discuss how to achieve deployable models. If you have not read the previous article, please read it here: Pigeons On the Edge

Quantization

Quantization is the process of reducing the numerical precision of a model to a lower-precision datatype, e.g. from FP32 (floating-point) to INT8. Which datatype to prefer depends strongly on the hardware. In this article, we will mainly focus on INT8 for the following reasons:

Coral Ai Edge TPU only supports INT8 operations

NXP iMX8 Plus is more efficient in INT8 precision and consumes less power

Newer Intel CPUs have dedicated INT8 co-processors and instruction sets, such as Intel® Advanced Matrix Extensions, which can do 2048 INT8 operations per cycle

What do we expect from a quantized model on dedicated hardware?

Efficient execution in both performance and power consumption

Decreased model size:

Coral Ai Edge TPU has only 8 MB of cache

Newer Large Language Models are even quantized to INT4 to be able to fit into consumer GPU hardware

Decreased model accuracy, due to lower computation precision

How to quantize a model?

Post-training quantization, where an already trained model is quantized. Each edge device normally has its own quantizer to deploy models.

Quantization-aware training, where not only the model accuracy but also the quantization factors are optimized during training, which increases the complexity of training and deployment. If necessary, activation functions can also be modified according to hardware requirements.

How does quantization work?

Scaled Integers, where real numbers are represented as integers multiplied by a factor and shifted with a bias

Lookup table (LUT), where complex functions like sigmoid and softmax are precomputed and their outputs are stored

When these methods are applied, a representative dataset is required

Other techniques exist, but these are the most commonly applied
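
The two techniques above can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation of any particular quantizer: the helper names (`calibrate`, `quantize`, `dequantize`, `build_sigmoid_lut`) and the sample values are assumptions made for this example. The representative values play the role of the representative dataset: they determine the factor (`scale`) and bias (`zero_point`).

```python
import math

QMIN, QMAX = -128, 127  # INT8 range


def calibrate(samples):
    """Derive factor (scale) and bias (zero point) from representative values."""
    lo = min(min(samples), 0.0)  # include 0.0 so that zero is exactly representable
    hi = max(max(samples), 0.0)
    scale = (hi - lo) / (QMAX - QMIN)
    zero_point = round(QMIN - lo / scale)
    return scale, zero_point


def quantize(x, scale, zero_point):
    """Real number -> INT8, clamped to the representable range."""
    q = round(x / scale) + zero_point
    return max(QMIN, min(QMAX, q))


def dequantize(q, scale, zero_point):
    """INT8 -> approximate real number."""
    return scale * (q - zero_point)


def build_sigmoid_lut(in_scale, in_zp, out_scale, out_zp):
    """Precompute the INT8 sigmoid output for every possible INT8 input."""
    lut = {}
    for q in range(QMIN, QMAX + 1):
        x = dequantize(q, in_scale, in_zp)
        y = 1.0 / (1.0 + math.exp(-x))
        lut[q] = quantize(y, out_scale, out_zp)
    return lut


# Hypothetical representative activations used for calibration
representative = [-4.0, -1.5, 0.0, 0.7, 2.3, 6.0]
scale, zp = calibrate(representative)

# Sigmoid outputs lie in [0, 1], so the output parameters are known up front
out_scale, out_zp = 1.0 / 255.0, -128
lut = build_sigmoid_lut(scale, zp, out_scale, out_zp)

x = 0.7
q = quantize(x, scale, zp)
approx_x = dequantize(q, scale, zp)
approx_sigmoid = dequantize(lut[q], out_scale, out_zp)
print(f"x={x} -> q={q} -> {approx_x:.4f}, sigmoid ~ {approx_sigmoid:.4f}")
```

At inference time the sigmoid is then a single table lookup on the INT8 value, and the rounding error of the scaled integer stays within half a quantization step.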

Deploying YOLO

Now that we have a better overview of quantization, let's try to quantize the YOLO model. To continue, we need a high-level understanding of the YOLO model:

Backbone, which is a convolutional neural network and outputs a feature pyramid

Head #1, where the last 3 layers of the pyramid are upscaled and convolved

Head #2, where the Detection layer is executed

Head #3, where the scores and bounding box coordinates are computed

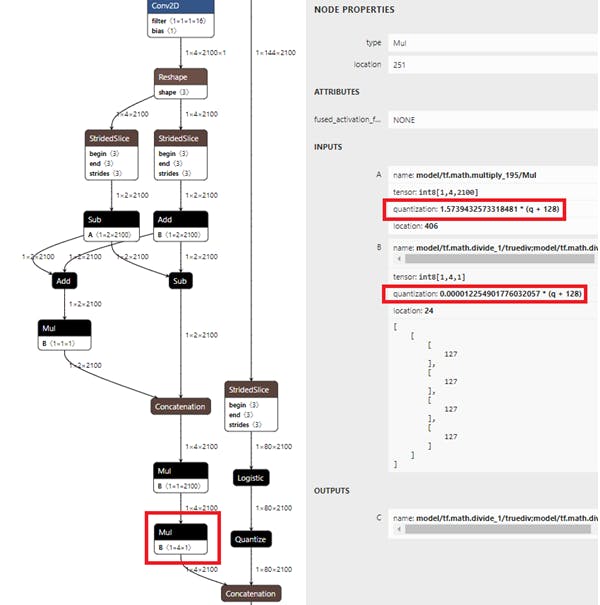

Fixing the Head #3 of YOLO

When INT8 quantization was applied to the YOLOv7 model, the output values were corrupted. Analyzing the code showed that the pixel coordinates and the class score values are not in the same range, so quantization collapses. Normalizing the coordinate values by the image size produced a more reasonable output, but some values were still wrong, and models with large input image sizes still suffered from quantization collapse. An analysis of the model's quantization parameters (factor, bias) revealed a large numerical instability. This was further improved by using normalized scaling factors instead of normalizing the output values by the image size. Precomputing the scaling factors is possible because we quantize static models: the input size is fixed and cannot change during inference.
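
The range mismatch can be illustrated with a small simulation. This is only a sketch under stated assumptions: it assumes that coordinates and scores share one output tensor and therefore one factor and bias, and the helper `quantize_dequantize`, the image size, and all sample values are hypothetical.

```python
QMIN, QMAX = -128, 127


def quantize_dequantize(values):
    """Round-trip a tensor through INT8 using ONE factor and bias for all values."""
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (QMAX - QMIN)
    zero_point = round(QMIN - lo / scale)
    restored = []
    for v in values:
        q = max(QMIN, min(QMAX, round(v / scale) + zero_point))
        restored.append(scale * (q - zero_point))
    return restored


IMG_SIZE = 640                          # assumed input resolution
boxes_px = [320.0, 240.0, 100.0, 50.0]  # x, y, w, h in pixels
scores = [0.91, 0.42, 0.07]             # class scores in [0, 1]

# Pixel coordinates and scores share one quantization range:
# one INT8 step is larger than 1.0, so the scores cannot be resolved.
scores_mixed = quantize_dequantize(boxes_px + scores)[4:]

# Coordinates normalized to [0, 1] share the range of the scores.
boxes_norm = [v / IMG_SIZE for v in boxes_px]
scores_norm = quantize_dequantize(boxes_norm + scores)[4:]

print("scores with pixel coordinates:     ", scores_mixed)
print("scores with normalized coordinates:", [round(s, 3) for s in scores_norm])
```

With pixel coordinates in the tensor, the two smaller scores round to zero, while with normalized coordinates all three scores survive the round trip with an error below one quantization step.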

As the next step, YOLOv8 was tested, and the same numerical instability was visible. Switching to normalized scaling factors instead of normalizing by image size fixed a bug here as well, and it further improved the model's mAP50-95 by about 4 points (28.7 to 32.9) compared to the previously reported values.

We can observe this in the first image, where normalizing by image size results in a quantization factor difference of 10^5.

In the second image, we see that with normalized scaling factors for the bounding box calculation the quantization factor difference is only 10^2.

| Model | mAP50-95 | Note |
| --- | --- | --- |
| yolov8n FP32 | 37.3 | Unquantized |
| yolov8n INT8 Main | 8.1 | Bug in repo |
| yolov8n INT8 Claimed | 28.7 | Claimed results |
| yolov8n INT8 Fixed | 32.9 | Fixed results |
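
One possible reading of the "normalized scaling factors" fix is sketched below. It is an illustration, not the code from the repository: the function names, the strides, and the input size are assumptions. Both variants give the same result in floating point, but the first one creates an intermediate tensor in pixel units, while the second one folds the image size into a constant that is known at export time because the input size is static.

```python
IMG_SIZE = 640         # assumed static input size
STRIDES = (8, 16, 32)  # assumed strides of the three detection scales


def normalize_after_decoding(cells, stride):
    """Scale grid coordinates to pixels first, then divide by the image size."""
    pixels = [c * stride for c in cells]  # intermediate values in pixels
    return [p / IMG_SIZE for p in pixels], max(pixels)


def normalize_with_precomputed_factor(cells, stride):
    """Fold stride and image size into one constant factor."""
    norm = stride / IMG_SIZE              # precomputed once per scale
    scaled = [c * norm for c in cells]    # values never leave [0, 1]
    return scaled, max(scaled)


results = []
for stride in STRIDES:
    grid = IMG_SIZE // stride
    cells = [0.5, grid / 2, grid - 0.5]   # first, middle, and last cell center
    a, peak_a = normalize_after_decoding(cells, stride)
    b, peak_b = normalize_with_precomputed_factor(cells, stride)
    results.append((stride, a, b, peak_a, peak_b))
    print(f"stride {stride:2d}: largest intermediate {peak_a:6.1f} vs {peak_b:.4f}")
```

The largest intermediate value drops from several hundred to below 1, which keeps the tensors feeding the quantizer in a range comparable to the class scores.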

Should quantization be applied to Head #3 at all?

Tests have shown that excluding the last mathematical operations from quantization increases the precision of the model, while the processing time barely increases. On a device that supports only INT8 operations, this would mean that the final steps need to be executed on the CPU, provided FP32 instructions are available. This would also mean that normalization is not necessary and the previous discussion would be irrelevant. At the moment, detaching the head is rather cumbersome to implement for TFLite quantization (TPU, NXP) and can only be achieved with dirty hacks.

For Intel CPUs, the scenario is different, as operations can fall back to the CPU and be executed in FP32 precision. The OpenVINO quantizer supports such changes by letting you explicitly define which operations should be excluded from the quantization process. Due to this freedom, normalizing is not necessary, as the quantizer implicitly excludes operations if quantization collapses. After careful testing with the whole Head #3 excluded, the mAP50-95 of the model improved by 1.4 points (35.7 to 37.1), while the average inference time only increased by 0.3% on an Intel 9th Gen CPU.

| Model | mAP50-95 | Inference | Note |
| --- | --- | --- | --- |
| yolov8n FP32 | 37.3 | PyTorch | Unquantized |
| yolov8n INT8 | 32.9 | TFLite (TPU, NXP) | Fixed results |
| yolov8n INT8 + FP32 | 35.2 | TFLite (TPU, NXP) | Detached Head #3 |
| yolov8n INT8 | 35.7 | OpenVINO (Intel) | Main branch |
| yolov8n INT8 + FP32 | 37.1 | OpenVINO (Intel) | Improved results |
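
The "INT8 + FP32" rows correspond to a model whose quantized part stops before Head #3. A rough sketch of what the detached head then does on the CPU is shown below. Everything here is an assumption for illustration: the function `decode_head_fp32`, the quantization parameters, the anchor position, and the decoding of boxes from left/top/right/bottom distances (as used by YOLOv8-style heads) are not taken from the article's code.

```python
import math


def dequantize(q_values, scale, zero_point):
    """Convert raw INT8 outputs back to FP32 values."""
    return [scale * (q - zero_point) for q in q_values]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def decode_head_fp32(q_box, box_qparams, q_cls, cls_qparams, anchor_xy, stride):
    """Hypothetical FP32 post-processing for a single anchor point."""
    # Distances from the anchor to the box edges, in grid units
    left, top, right, bottom = dequantize(q_box, *box_qparams)
    ax, ay = anchor_xy
    box = (
        (ax - left) * stride,    # x1 in pixels
        (ay - top) * stride,     # y1 in pixels
        (ax + right) * stride,   # x2 in pixels
        (ay + bottom) * stride,  # y2 in pixels
    )
    # Class logits become scores in FP32, so no quantized sigmoid is needed
    scores = [sigmoid(v) for v in dequantize(q_cls, *cls_qparams)]
    return box, scores


box, scores = decode_head_fp32(
    q_box=[20, 10, 30, 40], box_qparams=(0.125, 0),
    q_cls=[64, -64], cls_qparams=(0.0625, 0),
    anchor_xy=(10.5, 7.5), stride=16,
)
print(box, [round(s, 4) for s in scores])
```

Because pixel coordinates and scores are only produced after dequantization, they never have to share a quantization range, which is why normalization becomes unnecessary in this setup.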

NOTE: OpenVINO applies per-channel quantization, while TFLite can be switched between per-tensor and per-channel. Per-tensor quantization uses one factor and bias for the whole tensor, while per-channel quantization uses a separate factor and bias for each channel.

| Model | mAP50-95 | Inference | Note |
| --- | --- | --- | --- |
| yolov8n INT8 | 32.9 | TFLite (TPU, NXP) | per-tensor |
| yolov8n INT8 | 33.9 | TFLite (TPU, NXP) | per-channel |
| yolov8n INT8 + FP32 | 35.2 | TFLite (TPU, NXP) | per-tensor |
| yolov8n INT8 + FP32 | 36.3 | TFLite (TPU, NXP) | per-channel |
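
Why per-channel quantization tends to score higher can be seen in a toy example. The sketch below is a simplification: it uses symmetric quantization (a factor only, bias fixed at zero), and the weight values and helper names are made up for illustration.

```python
LEVELS = 127  # symmetric INT8: integers in [-127, 127]


def scale_for(values):
    """Factor that maps the largest magnitude onto the largest integer."""
    return max(abs(v) for v in values) / LEVELS


def round_trip(values, scale):
    """Quantize and dequantize with the given factor."""
    return [round(v / scale) * scale for v in values]


def max_error(original, restored):
    return max(abs(a - b) for a, b in zip(original, restored))


# Two hypothetical output channels with very different weight magnitudes
channels = [
    [0.02, -0.015, 0.01],  # small weights
    [1.8, -2.4, 0.9],      # large weights
]

# Per-tensor: one factor, dictated by the largest weight in the tensor
tensor_scale = scale_for([w for ch in channels for w in ch])
per_tensor = [round_trip(ch, tensor_scale) for ch in channels]

# Per-channel: every channel gets its own factor
per_channel = [round_trip(ch, scale_for(ch)) for ch in channels]

err_tensor_small = max_error(channels[0], per_tensor[0])
err_channel_small = max_error(channels[0], per_channel[0])
print(f"small channel, per-tensor error:  {err_tensor_small:.6f}")
print(f"small channel, per-channel error: {err_channel_small:.6f}")
```

With a single factor, the channel with small weights is squeezed into one or two integer levels. With its own factor, it uses the full INT8 range.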

Activation Functions on Edge Devices

Since some of the selected devices have restricted instruction sets, different activation functions are needed for deployed models.

LeakyReLU

YOLOv7-Tiny is trained with LeakyReLU

Coral Ai Edge TPU does not support LeakyReLU

NXP output is corrupted when using LeakyReLU

Intel works with LeakyReLU

SiLU

YOLOv8n is trained with SiLU

Coral Ai Edge TPU crashes when using SiLU

Intel works with SiLU

ReLU6

ReLU6 achieves lower mAP after training for both YOLOv7 and YOLOv8

ReLU6 has a smaller accuracy drop after quantization

ReLU6 works on both the Coral Edge TPU and NXP

| Model | mAP50-95 SiLU | mAP50-95 ReLU6 |
| --- | --- | --- |
| yolov8n FP32 | 37.4 | 34.0 |
| yolov8n INT8 | 33.9 | 31.4 |
| yolov8n INT8 + FP32 | 36.3 | 33.9 |
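
For reference, the three activation functions compared above can be written out as follows. The LeakyReLU slope of 0.1 and the input range are assumptions for this sketch. The step size printed at the end is a plausible, but not verified, explanation for the smaller accuracy drop of ReLU6: its output is bounded to [0, 6], so an 8-bit quantizer has to cover a smaller range than for the unbounded functions.

```python
import math


def leaky_relu(x, negative_slope=0.1):
    """Small linear slope for negative inputs, identity for positive ones."""
    return x if x >= 0 else negative_slope * x


def silu(x):
    """x * sigmoid(x); smooth and unbounded above."""
    return x / (1.0 + math.exp(-x))


def relu6(x):
    """ReLU clipped at 6, so the output range is fixed to [0, 6]."""
    return min(max(x, 0.0), 6.0)


def int8_step(outputs):
    """Size of one quantization step if 256 levels cover the observed range."""
    return (max(outputs) - min(outputs)) / 255


inputs = [i / 10 for i in range(-200, 201)]  # hypothetical pre-activations in [-20, 20]
step = {
    "leaky_relu": int8_step([leaky_relu(x) for x in inputs]),
    "silu": int8_step([silu(x) for x in inputs]),
    "relu6": int8_step([relu6(x) for x in inputs]),
}
for name, value in step.items():
    print(f"{name:10s} step size: {value:.4f}")
```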

Final Thoughts

Quantization is a hardware-dependent task. To achieve the best results, one should understand both the AI model and the hardware it will be deployed on. The YOLOv8 changes for TFLite and OpenVINO described in this article have been merged into the main repository.