Easy Azure Architecture for B2B Web Applications

Table of contents

- Introduction to the Easy Architecture

- Easy Architecture Walkthrough

- 1.) Unauthenticated User requests the SPA

- 2.) SSO

- 3.) Authenticated User requests the SPA

- 4.) SPA calls APIM

- Considerations on networking

- A.) Calls from APIM to Backends

- B.) Calls from Backends to On-Prem through APIM

- C.) Calls from App Services to ACR and Key Vault

- D.) Calls from Backend to Azure Storage and Database

- Why not use Service X or architecture Z?

Introduction to the Easy Architecture

In this post, we will explore a quick and rather easy architecture for deploying your web applications with a low to medium complexity to Azure.

The components used are:

- Azure App Services

- With the App Service integrated authentication (Easy Auth)

- Azure API Management (APIM)

- Azure Container Registry (ACR)

- Azure Key Vault

Additionally, you typically integrate a database of your choice, an SSO provider for authentication (in our case Azure Active Directory (AAD) in conjunction with Azure Active Directory B2C (AADB2C)), and optionally Azure Storage for file or blob storage.

Discussion on why these components were selected over other similar ones is left for the last part of this post.

Easy Architecture Walkthrough

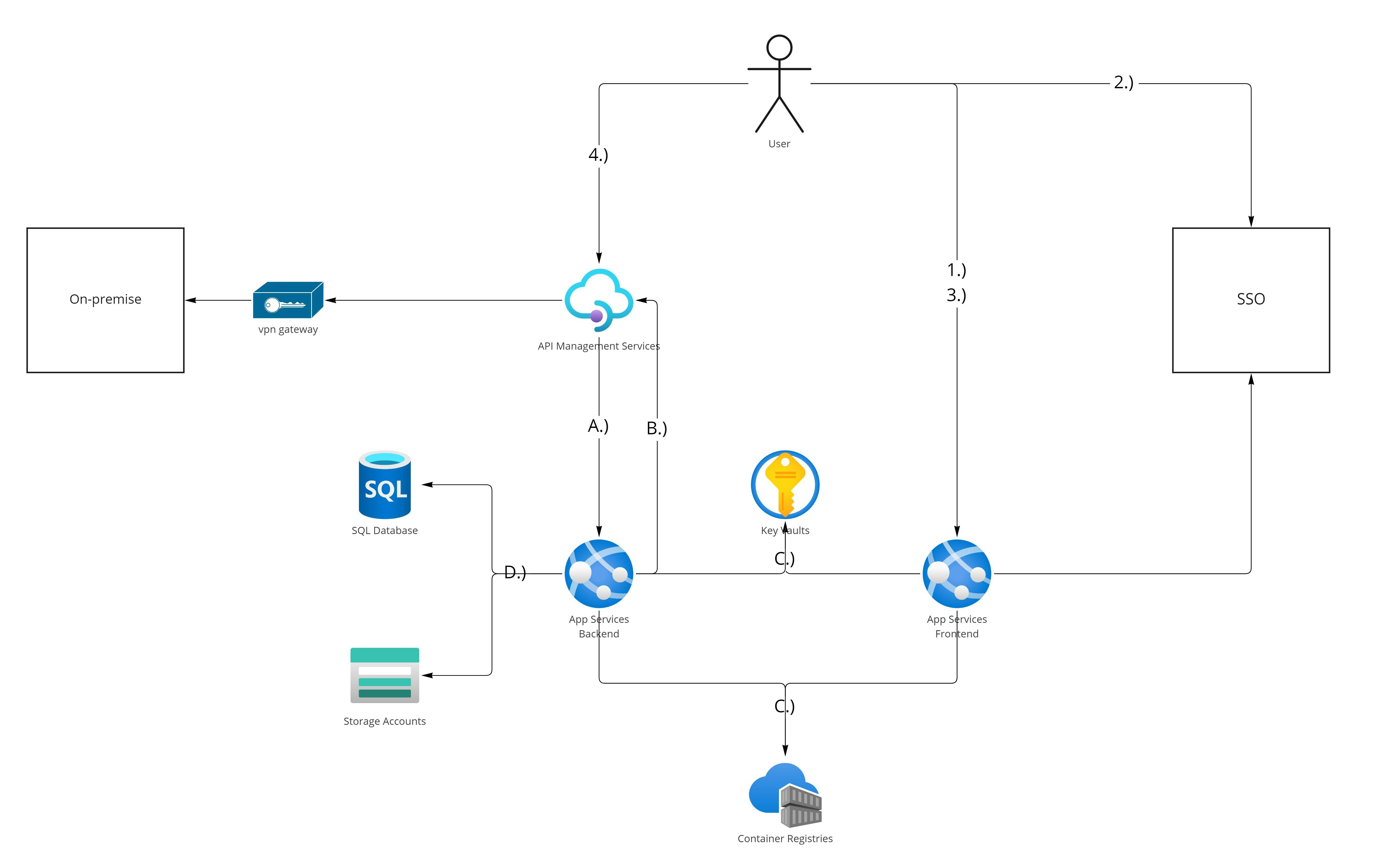

Without further ado, here is the proposed architecture and I will walk you through it step by step:

1.) Unauthenticated User requests the SPA

Now here's the thing: Notice how the blog post title says B2B Web Applications. For most publicly available websites, a typical user flow for authentication would look like this:

- User loads the SPA

- SPA checks if user is logged in and if not shows the login screen

- SPA handles login of the user (e.g. using Authorization Code Flow with PKCE)

For B2B Web Applications this is often an undesirable scenario, since the bundle itself can contain sensitive information (such as API calls) that reveals the intent of your application, the business entities it contains, and potentially even full business processes. Our chosen approach will not serve anything to a user unless they authenticate first. Azure App Service provides a middleware called Easy Auth which can achieve this with little configuration. The user has a session on the App Service, and unless authentication has already succeeded, the App Service redirects to SSO. It is an Azure (and Azure App Service) specific lightweight implementation of what e.g. OAuth2 Proxy does.

2.) SSO

We will not go into too much detail here. Whatever SSO / IdP you choose, configuration will be slightly different. You will always want an object representing the application with a clientId and secret, which you then configure on the App Service. Easy Auth uses a Hybrid Flow, which will send you an id_token with information about the user in the redirect to the App Service (so quite similar to an implicit flow) but will fetch access & refresh tokens through a backchannel following an Authorization Code Flow. So make sure implicit flows are allowed for the Application / Client on your SSO side for things to work properly.

3.) Authenticated User requests the SPA

After successful authentication, Azure App Service will allow the request to proceed. How you serve the SPA is up to you; an obvious choice would be a plain nginx server returning the bundle.

Retrieving credentials for use on backend calls by the SPA

Easy Auth provides two endpoints as GET on the App Service serving the frontend:

- /.auth/me returns a JSON document containing id_token, access_token and refresh_token (which you don't need) as well as other metadata from App Service

- /.auth/refresh exchanges the refresh token stored by the App Service against the SSO for a fresh set of access and refresh tokens

With these two endpoints your SPA has all the tools it needs to acquire valid tokens for use against backend / APIM calls, or get new access and refresh tokens as needed.
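As an illustration of how an SPA might use these two endpoints, here is a minimal TypeScript sketch. It assumes that /.auth/me answers with a JSON array whose first entry carries an access_token field; check the actual response of your own App Service before relying on that shape. The names HttpGet, extractAccessToken and fetchFreshAccessToken are made up for this example and are not part of Easy Auth.

```typescript
// Minimal structural types so the sketch does not depend on DOM typings.
type HttpResponse = { ok: boolean; status: number; json(): Promise<unknown> };
type HttpGet = (
  url: string,
  init?: { credentials?: "include" | "same-origin" | "omit" }
) => Promise<HttpResponse>;

// Assumption: /.auth/me answers with a JSON array and the first entry
// carries an "access_token" string. Returns undefined for any other shape.
function extractAccessToken(payload: unknown): string | undefined {
  if (!Array.isArray(payload) || payload.length === 0) return undefined;
  const entry = payload[0] as Record<string, unknown> | null;
  const token = entry?.["access_token"];
  return typeof token === "string" && token.length > 0 ? token : undefined;
}

// Asks App Service to refresh the stored tokens, then reads them back.
// The session cookie must be sent along, hence the "same-origin" credentials.
async function fetchFreshAccessToken(get: HttpGet): Promise<string> {
  const refresh = await get("/.auth/refresh", { credentials: "same-origin" });
  if (!refresh.ok) {
    throw new Error(`Token refresh failed with HTTP ${refresh.status}`);
  }
  const me = await get("/.auth/me", { credentials: "same-origin" });
  if (!me.ok) {
    throw new Error(`/.auth/me failed with HTTP ${me.status}`);
  }
  const token = extractAccessToken(await me.json());
  if (token === undefined) {
    throw new Error("No access_token found in the /.auth/me response");
  }
  return token;
}
```

In a browser you could call it as `fetchFreshAccessToken((url, init) => fetch(url, init))`. Passing the HTTP function in as a parameter keeps the sketch easy to test without a running App Service.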

Important information regarding sessions & refresh tokens

App Service sessions and refresh tokens are two different decoupled things and sadly App Service does not care about the lifetime of your refresh tokens. This means you can end up in an inconsistent state if:

- Your App Service session is still valid

- Your refresh token is expired

From that point on, calling the /.auth/refresh endpoint will result in a 403, and reloading the page will simply cause the bundle to reload and try to refresh the tokens again, which fails. The only way out is to wait for your session to expire or to clear your cookies, neither of which is a good option. The default session duration for App Service is 24 hours at the time of writing, which is (in my opinion) too long for refresh tokens to be valid.

To avoid being caught in this loop, you need to adjust the session duration of App Service to be less than or equal to your refresh token lifetime. You can achieve this by setting a property on the subscriptions/{subscription-name}/resourceGroups/{resource-group-name}/providers/Microsoft.Web/sites/{your-appservice-name}/config/authsettingsV2 resource, either through an ARM template or through Azure Resource Explorer. Look for the "cookieExpiration.timeToExpiration" value and change it to an appropriate value (e.g. 1 hour and 59 minutes for the App Service session if your refresh tokens expire after 2 hours, just to avoid corner cases of refresh failures).
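To make the arithmetic concrete, the following TypeScript sketch derives the session duration from the refresh token lifetime and builds the matching settings fragment. It rests on assumptions that are not spelled out above: that timeToExpiration is written as an "hh:mm:ss" string, that cookieExpiration sits under a login node, and that a convention of "FixedTime" is what makes the value take effect. Verify all three against your own authsettingsV2 resource. The function names are made up for this example.

```typescript
// Formats a whole number of minutes (below 24 hours) as "hh:mm:ss".
function toTimeSpan(totalMinutes: number): string {
  const pad = (n: number): string => (n < 10 ? `0${n}` : String(n));
  const hours = Math.floor(totalMinutes / 60);
  const minutes = totalMinutes % 60;
  return `${pad(hours)}:${pad(minutes)}:00`;
}

// Builds the cookie-related fragment of authsettingsV2 so that the session
// ends a little before the refresh token does.
// Assumption: the "login" parent and the "FixedTime" convention are taken
// from memory of the resource schema, not from this post.
function sessionCookieSettings(refreshTokenMinutes: number, marginMinutes = 1) {
  const sessionMinutes = refreshTokenMinutes - marginMinutes;
  if (!Number.isInteger(sessionMinutes) || sessionMinutes <= 0 || sessionMinutes >= 24 * 60) {
    throw new Error("Session duration must be a whole number of minutes below 24 hours");
  }
  return {
    login: {
      cookieExpiration: {
        convention: "FixedTime",
        timeToExpiration: toTimeSpan(sessionMinutes),
      },
    },
  };
}
```

For refresh tokens that live 2 hours, `sessionCookieSettings(120)` yields a timeToExpiration of "01:59:00", which matches the example above.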

4.) SPA calls APIM

This is again straightforward: by including a valid access token, the SPA may access APIs published on APIM. We suggest that APIM validates the JWT claims which allow access to the app in general, while the backend behind APIM validates access and fine-grained permissions. APIM can validate JWTs by using the validate-jwt policy. The presence of the policy has to be verified either manually or through automation (so that APIM is not accidentally configured to be accessible by unauthenticated users), but stay tuned for a future blog post concerning best practices for APIM instances (shared, because they are quite expensive).
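On the SPA side, the call could look roughly like the TypeScript sketch below. It attaches the access token as a Bearer header and retries once with a refreshed token if APIM answers with 401, which is assumed here to be the status your validate-jwt policy returns for a rejected token. ApiCall, withBearer and callApim are hypothetical names for this example, and the token providers are passed in so that you can plug in whatever token handling you use.

```typescript
type HeaderMap = Record<string, string>;
type ApiResponse = { ok: boolean; status: number };
type ApiCall = (url: string, init: { headers: HeaderMap }) => Promise<ApiResponse>;

// Returns a copy of the given headers with the Bearer token added.
function withBearer(token: string, headers: HeaderMap = {}): HeaderMap {
  return { ...headers, Authorization: `Bearer ${token}` };
}

// Calls an API on APIM with the current token. If the token is rejected
// (assumed to surface as HTTP 401), it asks for a fresh token and retries
// exactly once, then returns whatever response it got.
async function callApim(
  url: string,
  call: ApiCall,
  getToken: () => Promise<string>,
  refreshToken: () => Promise<string>
): Promise<ApiResponse> {
  let response = await call(url, { headers: withBearer(await getToken()) });
  if (response.status === 401) {
    response = await call(url, { headers: withBearer(await refreshToken()) });
  }
  return response;
}
```

Retrying only once is a deliberate choice: if the refreshed token is rejected as well, the problem is unlikely to be an expired token, and looping would only hide it.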

Considerations on networking

API Management services can serve multiple purposes, but for us the key drivers were discoverability of APIs and a central point for applying governance. To achieve this goal, you typically want to have one (or very few) shared APIM instances (additionally, APIM instances are also very expensive), which are typically placed in shared subscriptions / networks and are the joint responsibility of multiple application development teams (which often form a shared infrastructure team). In order to reduce dependencies between application development teams and APIM teams, our architecture does not require tying App Services and the APIM instance together in SDN terms (e.g. Private Endpoints). Instead, every App Service instance and the APIM instance are publicly available, but also have a static IP for outbound traffic, which is used for IP whitelisting as required. With that in mind, let's go over what happens "behind" APIM.

A.) Calls from APIM to Backends

App Services hosting backends have a public IP address (you can also use the Microsoft-assigned domain names just fine, as it's background traffic only, to avoid handling DNS and SSL tasks). To force traffic through APIM, we place an Access Restriction on the App Service that allows the APIM IP only. We achieve the same result as having everything routed privately, but without any networking hassle (while maybe being slightly more exposed on the DoS side, but that's just me guessing).
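As a rough idea of what such an Access Restriction contains, the TypeScript sketch below builds a single allow rule for the APIM outbound IP. The field names follow the ipSecurityRestrictions entries of the App Service site configuration as I remember them, so treat them as an assumption and compare with your own template. The rule name, the priority and the IP address in the usage note are placeholders.

```typescript
type AccessRestrictionRule = {
  name: string;
  ipAddress: string; // CIDR notation
  action: "Allow" | "Deny";
  priority: number;
};

// Builds a rule list that allows exactly one IPv4 address: the static
// outbound IP of the APIM instance.
function allowOnlyApim(apimOutboundIp: string): AccessRestrictionRule[] {
  // Loose shape check only; it does not validate the range of each octet.
  if (!/^\d{1,3}(\.\d{1,3}){3}$/.test(apimOutboundIp)) {
    throw new Error(`Not an IPv4 address: ${apimOutboundIp}`);
  }
  return [
    {
      name: "allow-apim", // hypothetical rule name
      ipAddress: `${apimOutboundIp}/32`, // /32 matches this single address
      action: "Allow",
      priority: 100,
    },
  ];
}
```

For example, `allowOnlyApim("203.0.113.10")` produces one rule for 203.0.113.10/32. No explicit deny rule is listed because, to my knowledge, App Service denies everything else implicitly once at least one rule exists.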

B.) Calls from Backends to On-Prem through APIM

You can also securely expose On-Prem systems through APIM by whitelisting access to the APIs which route to On-Prem for the IPs of the App Services hosting your backends only. By using App Service VNet integration in conjunction with a fixed-IP NAT Gateway, you can force all traffic originating from your App Services through a fixed IP, which you can whitelist. To successfully access On-Prem systems you therefore need to have:

- A specific IP assigned to one or multiple of your backends, verified by the ip-filter policy

- A valid JWT with permissions to access the API, verified by the validate-jwt policy

This is arguably again the same result as having everything tied together in one SDN, with less hassle, as you don't need two parties (one with control of the shared APIM subscription and one with control of the application subscription). A breach is only possible by penetrating your App Service instance (which can be accessed only via specific APIs on APIM as explained in A.)).

C.) Calls from App Services to ACR and Key Vault

In order to pull container images and load secrets securely, your App Service instances need access to these services. While network integration exists, it complicates things, as you typically also have other services outside of an Azure ecosystem (e.g. agents running your deployment pipelines).

- For pulling an image by App Service from ACR we use a (User Assigned) managed identity, for pushing images we use a service account provisioned in Azure AD with appropriate access (and e.g. certificate authentication). We consider this approach secure enough to not hide the ACR behind networking boundaries.

- For reading Secrets from Key Vault the App Service can make use of the same managed identity. Again, we consider this secure enough to not require networking integration.

D.) Calls from Backend to Azure Storage and Database

For Azure Storage and the Database (PostgreSQL in our case) we use Private Link integration to our backend, as the backend is the only service needing both on a regular basis, and we deny all traffic on the public endpoints. For manual debugging we add access exceptions as needed (e.g. allow your IP on the Database server for as long as you have to connect, and remove the exception afterwards).

Why not use Service X or architecture Z?

Azure offers a ton of services. Way too many to be an expert on every one of them. We evaluated most options to run a Web Application the way we do it (which is using a Java / SpringBoot + Angular stack) and decided to use App Services for most simple to moderately complex scenarios, and Azure Kubernetes Service for everything that is more sophisticated.

App Services offer a quick way to get your applications running (containerized or not), come with DNS + SSL + Authentication out of the box, have good monitoring tools (at a cost, but easy to use) and require less extensive knowledge to operate successfully and safely (unlike the aforementioned AKS). In today's market, where knowledgeable IT employees are highly sought after, this is a clear advantage over more complex architectures, which require not only knowledge of how to deploy your applications to e.g. Kubernetes, but also knowledge of how to safely operate your infrastructure (yes, AKS helps, but it is no magic zero-maintenance solution - there is a lot which can go wrong during a typical cluster lifecycle). At the end of the day, most IT-savvy people will have a basic understanding of IP whitelisting and setting up authentication, which should be enough to design and operate our proposed architecture safely - this puts the "Easy" in our title.

If you want to discuss this blog post or discover how we can help you with developing and deploying your software to Microsoft Azure, use the comment section, drop me a mail anytime at vincenzo.sessa@cloudflight.io or add me on LinkedIn. Thanks for reading.